L. E. Johnston¹, R. K. Ghanta¹, B. D. Kozower¹, C. L. Lau¹, J. M. Isbell¹ ¹University of Virginia, Division of Thoracic and Cardiovascular Surgery, Charlottesville, VA, USA

Introduction: Measurement of healthcare quality is rapidly moving from the hospital level to the provider level, with two recent initiatives presenting surgeon rankings based on Medicare data. Previous studies have demonstrated significant differences between hospital ranking systems. We hypothesize that there will be similar differences between surgeon ranking systems, and that hospital specialty ranking will not correlate with the average ranking of its surgeons in a particular specialty.

Methods: The U.S. News & World Report (USNWR) hospital scores in orthopedics, urology, gastroenterology & GI surgery, and cardiac care were selected as the basis for comparison. The USNWR scores were averaged, and the top 10 hospitals in each specialty were ranked based on the mean score. Hospitals included in this list were identified in ProPublica’s (PP) Surgeon Scorecard and Consumer’s Checkbook’s (CC) SurgeonRatings.org websites. Both sites analyze physician outcomes based on Medicare billing data from 2009-2013 (PP) or 2009-2012 (CC), and USNWR scores incorporated Medicare data from the same time frame. For each website, we recorded each surgeon's score by specialty area and averaged these scores to generate the mean surgeon score at an institution. The mean surgeon score was then used to rank institution-level performance in order to examine the correlation between the PP and CC rating systems, as well as between each of the two rating systems and USNWR.
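The institution-level comparison described above can be illustrated with a minimal Python sketch. It is not the authors' analysis code. The abstract does not state which correlation coefficient was used, so a Spearman rank correlation is assumed here, and all function names, hospital labels, and scores are hypothetical.

```python
from statistics import mean


def mean_score_by_institution(surgeon_scores):
    """Average surgeon-level scores within each institution.

    surgeon_scores is an iterable of (institution, score) pairs.
    """
    by_institution = {}
    for institution, score in surgeon_scores:
        by_institution.setdefault(institution, []).append(score)
    return {inst: mean(scores) for inst, scores in by_institution.items()}


def average_ranks(values):
    """Rank values from 1 (smallest) upward; tied values share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman correlation: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical data: surgeon-level scores from one rating site and
# hospital-level scores from a hospital ranking system.
surgeon_scores = [
    ("Hospital A", 2.1), ("Hospital A", 2.9),
    ("Hospital B", 3.4),
    ("Hospital C", 1.8), ("Hospital C", 2.0), ("Hospital C", 2.5),
]
hospital_scores = {"Hospital A": 71.0, "Hospital B": 88.5, "Hospital C": 64.2}

institution_means = mean_score_by_institution(surgeon_scores)
hospitals = sorted(hospital_scores)
rho = spearman(
    [institution_means[h] for h in hospitals],
    [hospital_scores[h] for h in hospitals],
)
print(f"Spearman correlation across {len(hospitals)} hospitals: {rho:.2f}")
```

The sign of the coefficient depends on whether a higher score means better performance in each system, so the two scales would need to be aligned before interpreting the direction of any correlation.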

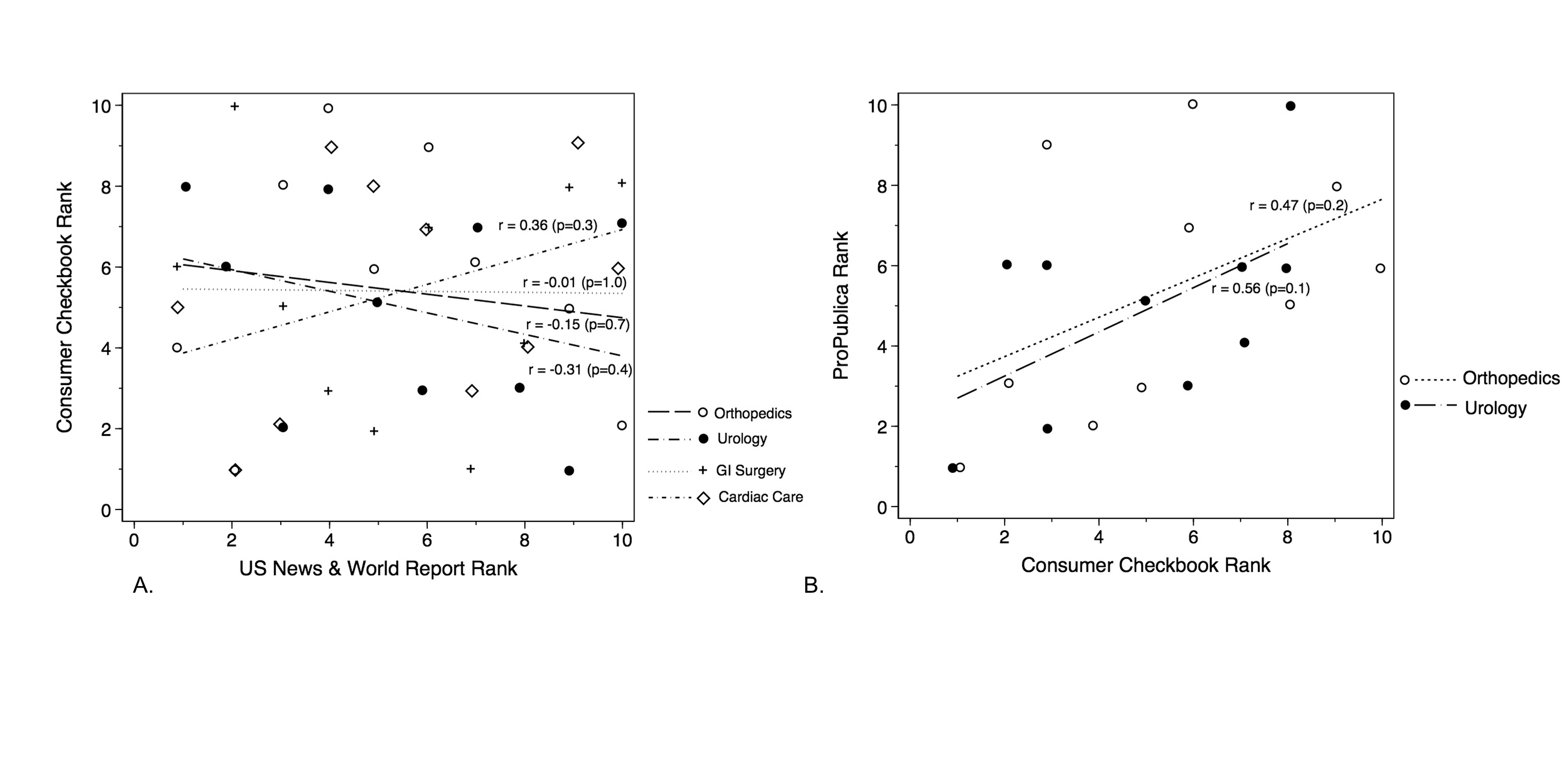

Results: Compared to averaged USNWR institution rankings, PP rankings had correlation coefficients of 0.33 (p=0.4) in orthopedics and -0.59 (p=0.1) in urology. CC ranking correlations with USNWR were -0.15 (p=0.7) for orthopedics, -0.31 (p=0.4) for urology, -0.01 (p=1.0) for GI surgery, and 0.36 (p=0.3) for cardiac care (Figure, panel A). Comparing PP rankings to CC rankings, the correlation coefficients were 0.47 in orthopedics and 0.56 in urology (Figure, panel B). There was no consistent relationship between the number of surgeons or procedures ranked and the institutional rankings.

Conclusion: Surgeon rankings generated from Medicare data are inconsistent and depend on the methodology used. Average surgeon rankings in a specialty do not correlate well with hospital rankings using either of the publicly available surgeon ranking tools. Current ranking systems produce highly variable results that may confuse rather than inform healthcare consumers, and may inappropriately incentivize surgeons to operate only on lower-risk patients in order to improve their ratings.