A. Chandrasekaran¹, L. Sharma¹, M. Gadkari¹, P. Ward¹, T. Pesavento¹, M. Hauenstein¹, S. Moffatt-Bruce¹
¹Ohio State University, Surgery, Columbus, OH, USA

Introduction: This research investigates the effect of a newly developed, patient-centric, standardized discharge process on patient anxiety at discharge and on 30-day readmissions among kidney transplant recipients. Inconsistent delivery of information during discharge is generally blamed for the high number of 30-day readmissions in this population. A standardized discharge process consists of activities with clearly defined content, sequence, timing and outcome. In this research, the new standardized discharge process was developed by the nurses who perform discharge planning, through five structured workshops, and was supplemented with input from patients. We collect and analyze patient data from before and after process standardization to evaluate the efficacy of the new discharge process.

Methods: This is a three-year study, and we are currently in Year 2. In Year 1, we collected pre-intervention data (qualitative and quantitative) from over 100 kidney transplant recipients at a major kidney transplant center in the United States. We also shadowed nurses as they delivered discharge instructions and collected data on their job satisfaction. In Year 2, we conducted five structured workshops with inpatient and outpatient nurses (with input from patients) to design and develop a standardized discharge process. We also standardized the teaching aids, IT systems and patient books to reflect the new standards, which were adopted on July 27, 2015. At the same time, we began continuous improvement activities (morning huddles) in these units to discuss the implementation and further improvement of discharge procedures. In Year 3, we will collect patient data on readmissions and post-discharge quality of life, as well as data from nurses on their quality of work, and compare these with the pre-intervention data.

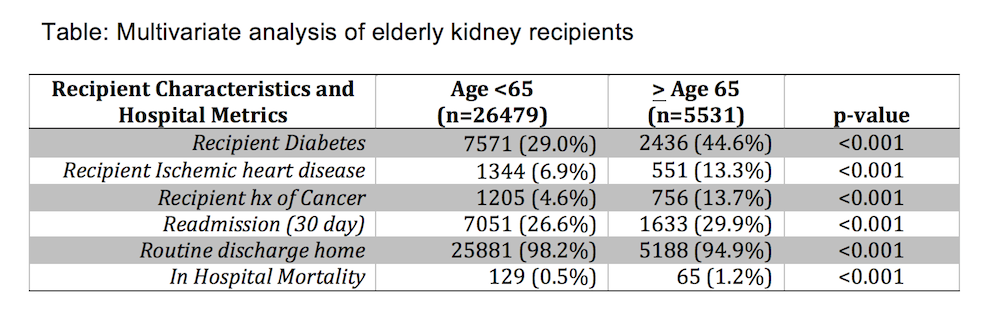

Results: Preliminary results from the pre-intervention data on 100 patients indicate that patient anxiety one week after discharge strongly predicts readmission. Before our intervention, the readmission rate was approximately 27%. Specifically, each one-unit increase in a patient's post-discharge anxiety level is associated with a 38% increase in the odds of readmission. We also found that patient anxiety levels depend primarily on two factors: having a standardized discharge process and the empathy shown to patients during their hospital stay. We are now collecting patient and caregiver data following implementation of the new standardized discharge process. We expect patient anxiety levels, readmissions, and caregiver job satisfaction to improve as a result of the intervention.
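The "38% increase in odds per unit of anxiety" can be made concrete with a little arithmetic. The sketch below assumes the result comes from a logistic regression (the abstract does not name the model), in which an odds ratio of 1.38 corresponds to a coefficient of ln(1.38) ≈ 0.322. Purely for illustration, it treats the overall pre-intervention readmission rate of 27% as a starting probability and shows how that probability would shift with one- or two-unit increases in anxiety. The function names are hypothetical, and the output is not a result from the study.

```python
import math


def log_odds_coefficient(odds_ratio: float) -> float:
    """Coefficient beta implied by a per-unit odds ratio, since OR = exp(beta)."""
    return math.log(odds_ratio)


def shifted_probability(p0: float, odds_ratio: float, units: float = 1.0) -> float:
    """Probability after a `units` increase in the predictor, starting from p0.

    Assumes a logistic model, where each unit increase multiplies the odds by `odds_ratio`.
    """
    odds0 = p0 / (1.0 - p0)                # probability -> odds
    odds1 = odds0 * odds_ratio ** units    # odds scale multiplicatively per unit
    return odds1 / (1.0 + odds1)           # odds -> probability


if __name__ == "__main__":
    print(f"beta = {log_odds_coefficient(1.38):.3f}")  # 0.322
    # Illustrative only: 27% baseline, odds ratio 1.38 per anxiety unit.
    for k in (0, 1, 2):
        print(k, round(shifted_probability(0.27, 1.38, k), 3))  # 0.27, 0.338, 0.413
```

Because odds (not probabilities) scale multiplicatively, a 38% rise in odds raises a 27% probability to roughly 34%, not to 27% × 1.38 ≈ 37%.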

Conclusion: Our study aims to produce an efficient, patient-centric discharge process that improves the discharge experience of kidney transplant patients and increases their compliance with discharge instructions, thereby reducing readmissions.